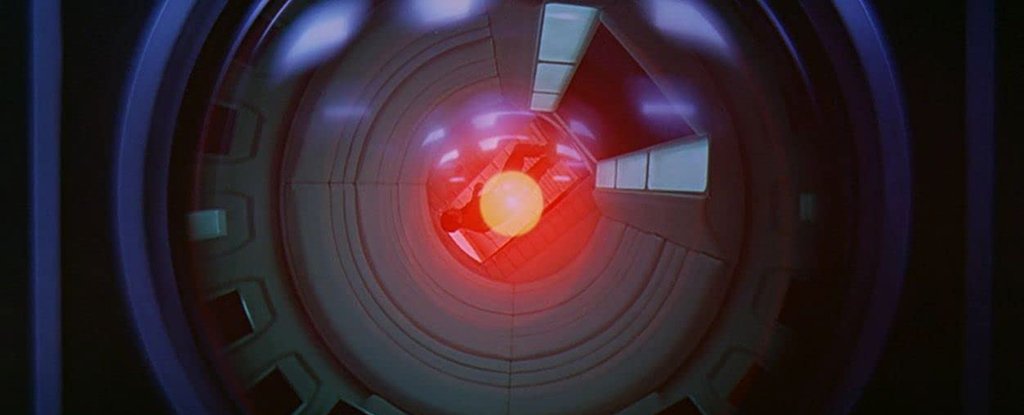

The idea of artificial intelligence overthrowing humanity has been discussed for decades, and scientists have now delivered their verdict on whether we would be able to control a high-level computer superintelligence. The answer? Almost certainly not.

The problem is that controlling a superintelligence far beyond human understanding would require a simulation of that superintelligence that we can analyze. But if we are not able to understand it, it is impossible to create such a simulation.

Rules such as “do not cause harm to humans” cannot be set if we don't understand the kinds of scenarios an AI will face, the authors of the new paper suggest. Once a computer system is working at a level beyond the reach of our programmers, we can no longer set limits.

“A superintelligence poses a fundamentally different problem from those normally studied under the banner of robot ethics,” the researchers write.

“This is because superintelligence has multiple facets and is therefore potentially capable of mobilizing a diversity of resources to achieve goals that are potentially incomprehensible to humans, let alone controllable.”

Part of the team’s reasoning comes from the halting problem posed by Alan Turing in 1936. The problem asks whether a computer program will reach a conclusion and an answer (and therefore halt), or will simply loop forever trying to find one.

As Turing proved through some clever mathematics, while we can know this for some specific programs, it is logically impossible to find a method that tells us the answer for every potential program that could ever be written. That brings us back to AI, which in a superintelligent state could feasibly hold every possible computer program in its memory at once.

Any program written to stop AI from harming humans and destroying the world, for example, may or may not reach a conclusion (and halt). It is mathematically impossible for us to be absolutely sure either way, which means the AI cannot be contained.
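Turing's diagonal argument can be illustrated with a short Python sketch. This is an illustration only, not the study's own construction: programs are modelled as Python generators (each `yield` is one computation step, and an exhausted generator has halted), and `make_contrarian` and `halts_within` are hypothetical helpers introduced here. Whatever candidate halting decider is supplied, the program built from it does the opposite of what the decider predicts about it, so no decider can be right about every program.

```python
from typing import Callable, Iterator

# Illustrative modelling assumption (not from the study): a "program" is a
# zero-argument function returning a generator. Each yield is one step; the
# program halts when the generator is exhausted.
Program = Callable[[], Iterator[None]]
# A candidate decider claims: True means "this program halts".
Decider = Callable[[Program], bool]


def make_contrarian(decider: Decider) -> Program:
    """Build a program that does the opposite of what `decider` predicts about it."""
    def contrarian() -> Iterator[None]:
        if decider(contrarian):
            # The decider says we halt, so never halt.
            while True:
                yield
        # The decider says we run forever, so stop immediately.
    return contrarian


def halts_within(program: Program, max_steps: int) -> bool:
    """Run `program` for at most `max_steps` steps; return True if it finished."""
    steps = program()
    for _ in range(max_steps):
        try:
            next(steps)
        except StopIteration:
            return True
    return False


# Two naive candidate deciders; both are wrong about their own contrarian.
always_yes: Decider = lambda program: True
always_no: Decider = lambda program: False

for name, decider in [("always_yes", always_yes), ("always_no", always_no)]:
    prog = make_contrarian(decider)
    predicted = decider(prog)
    observed = halts_within(prog, max_steps=10_000)
    print(f"{name}: predicted halts={predicted}, halted within bound={observed}")
# always_yes: predicted halts=True, halted within bound=False
# always_no: predicted halts=False, halted within bound=True
```

The step bound in `halts_within` is only conclusive here because the contrarian's loop is infinite by construction. For an arbitrary program, running it for a while cannot distinguish "still working" from "will never stop".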

“It actually makes the containment algorithm unusable,” says computer scientist Iyad Rahwan of the Max Planck Institute for Human Development in Germany.

The researchers say the alternative to teaching AI some ethics and telling it not to destroy the world (something no algorithm can be absolutely certain of doing) is to limit the capabilities of the superintelligence. It could be cut off from parts of the internet or from certain networks, for example.

The new study rejects this idea too, suggesting that it would limit the reach of the artificial intelligence. The argument goes that if we are not going to use it to solve problems beyond human reach, why create it at all?

If we push ahead with artificial intelligence, we may not even know when a superintelligence slips beyond our control, such is its incomprehensibility. That means we need to start asking serious questions about the direction we are heading in.

“A super-intelligent machine that controls the world sounds like science fiction,” says computer scientist Manuel Cebrian of the Max Planck Institute for Human Development. “But there are already machines that perform certain important tasks independently without programmers fully understanding how they learned it.”

“Therefore, the question arises as to whether this could at some point become uncontrollable and dangerous to humanity.”

The research has been published in the Journal of Artificial Intelligence Research.