Twitter on Wednesday announced that it is testing a new feature called "Safety Mode," which aims to keep users from being overwhelmed by harmful tweets and unwanted replies and mentions. The feature temporarily blocks accounts from interacting with users to whom they have sent harmful language or repeated, uninvited replies or mentions.

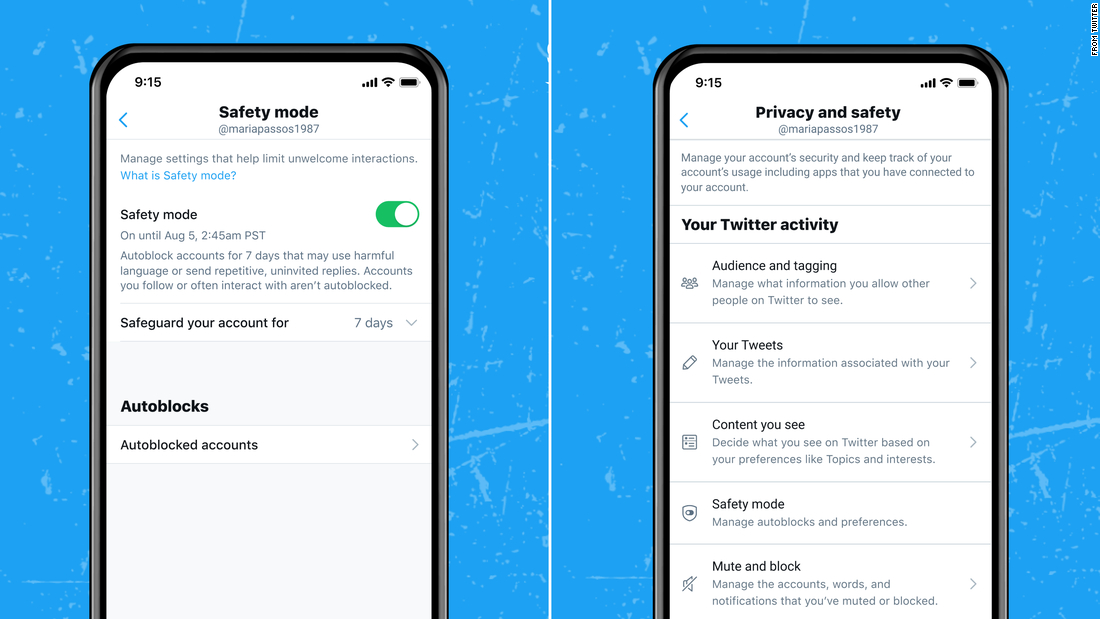

Once a user turns on Safety Mode in their settings, Twitter's systems evaluate "the content of the incoming tweet and the relationship between the author of the tweet and the respondent." If the automated system identifies an account that has repeatedly engaged with the user in a harmful way, it blocks that account for seven days, during which the account cannot follow the user, view their tweets, or send them direct messages.

Twitter spokeswoman Tatiana Britt said the platform does not proactively notify accounts that they have been blocked. However, if a blocked account visits the user's profile, it will see that "Twitter has automatically blocked them" and that the user has Safety Mode turned on, she said.

The company says its technology takes existing relationships into account to avoid blocking accounts the user interacts with frequently, and that users can review and reverse any blocking decision at any time.

For now, Safety Mode is only a limited test. Starting Wednesday, it is rolling out to "a small feedback group" of English-language users on iOS, Android and Twitter.com, which includes "people from marginalized communities and journalists."